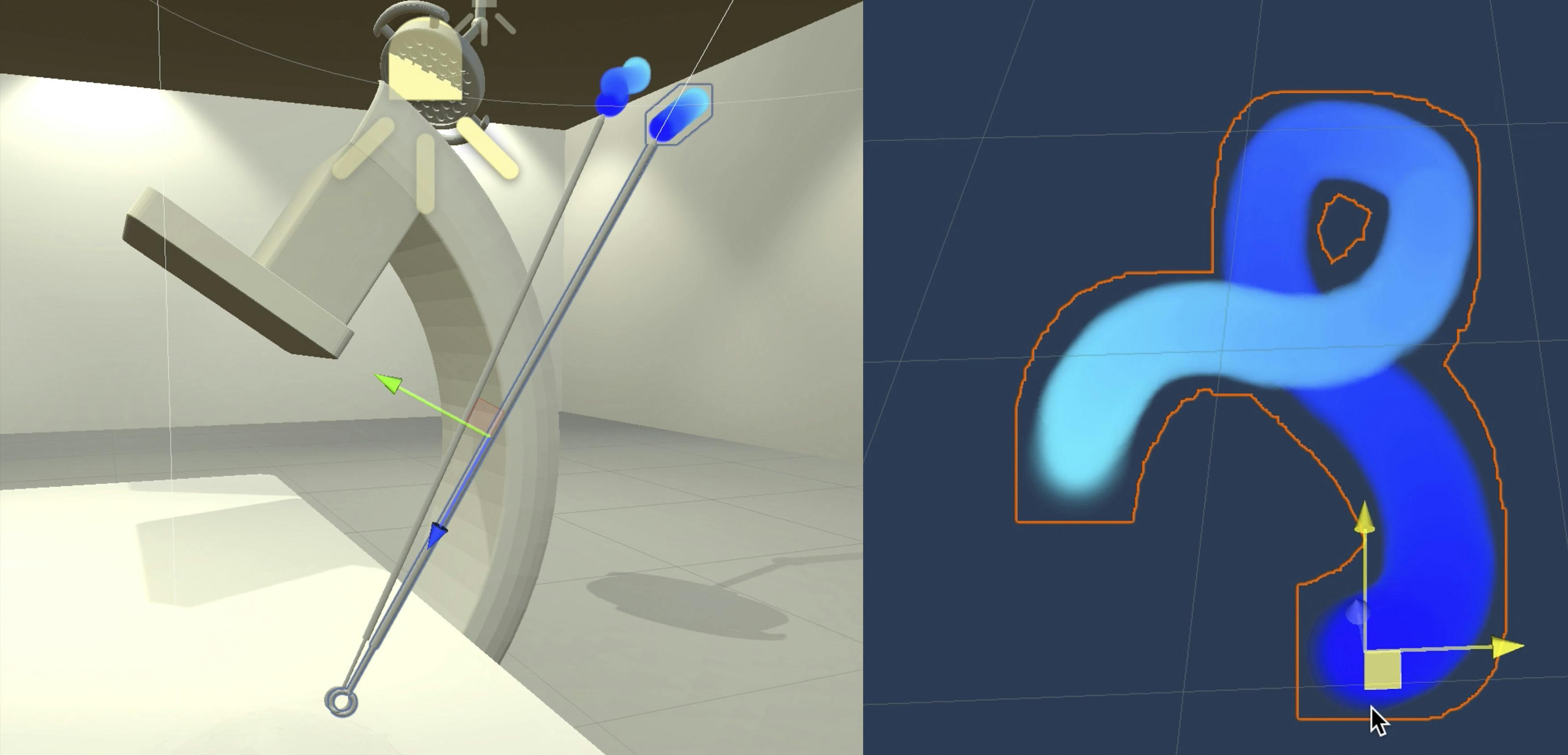

Comparison of motion data in VR

“Advanced Project course in Interactive Media Technology” at KTH

This project explored the accuracy of spatio-temporal distance perception during a surgical procedure in Virtual Reality.

Background & Research Focus

While previous studies have examined depth perception in extrapersonal space, perception in peripersonal space is less well understood, and existing findings are contradictory. Our research aimed to compare the perception of spatio-temporal distances using three types of motion data:

- Raw motion capture data

- Averaged, filtered motion capture data

- Synthetic motion data

To investigate these differences, participants wore a VR headset and observed a surgical catheter moving through a transparent plane. Their depth perception was assessed via questionnaires during and after the study. Our findings showed no significant difference in spatio-temporal distance perception between the three motion types, and an overall overestimation of distances in peripersonal space within VR.
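As a rough illustration of how the three motion types differ, the sketch below derives an averaged, filtered trajectory from several raw trials and generates a synthetic one. It is not the project's actual pipeline: the function names, the moving-average filter, and the minimum-jerk profile used for the synthetic path are all assumptions chosen for illustration.

```python
import random

Point = tuple[float, float, float]


def average_trials(trials: list[list[Point]]) -> list[Point]:
    """Average several equally long trajectories sample by sample."""
    n = len(trials)
    length = len(trials[0])
    return [
        tuple(sum(trial[i][k] for trial in trials) / n for k in range(3))
        for i in range(length)
    ]


def moving_average(path: list[Point], window: int = 5) -> list[Point]:
    """Centered moving-average filter; the window shrinks at the edges."""
    half = window // 2
    smoothed = []
    for i in range(len(path)):
        segment = path[max(0, i - half):min(len(path), i + half + 1)]
        smoothed.append(
            tuple(sum(p[k] for p in segment) / len(segment) for k in range(3))
        )
    return smoothed


def synthetic_path(start: Point, end: Point, samples: int) -> list[Point]:
    """Straight-line path with a minimum-jerk speed profile (assumed model)."""
    path = []
    for i in range(samples):
        t = i / (samples - 1)
        s = 10 * t**3 - 15 * t**4 + 6 * t**5  # eases in and out smoothly
        path.append(tuple(a + (b - a) * s for a, b in zip(start, end)))
    return path


if __name__ == "__main__":
    random.seed(0)
    ideal = synthetic_path((0.0, 0.0, 0.0), (0.3, 0.0, 0.1), samples=50)
    # Hypothetical "raw" trials: the ideal path plus sensor-like jitter.
    raw_trials = [
        [tuple(c + random.gauss(0, 0.002) for c in p) for p in ideal]
        for _ in range(4)
    ]
    averaged_filtered = moving_average(average_trials(raw_trials), window=5)
    print(len(raw_trials[0]), len(averaged_filtered), len(ideal))
```

Raw data keeps every jitter of a single recording, the averaged and filtered version trades that detail for a smoother representative motion, and the synthetic path has no recorded noise at all.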

My Contributions

- Synthesized movement data – Implemented and refined synthetic motion data for comparison in the study.

- Motion trace solution – Developed a system to visualize the catheter's movement over time. It was ultimately not used because the study design changed (see the blue traces in one of the images).

- Project video – Created a video summarizing the research and findings.

This project combined development, research, and presentation, offering insights into human depth perception in VR-based surgical environments.

Other team members: Kári Steinn Aðalsteinsson, Larissa Wagnerberger and Ólafur Konráð Albertsson.